Timing Struggles in WebAudio

In this first devlog I’ll be talking about the challenges of audio timing on the web and keeping our virtual drummers happy 🥁

Timing Struggles

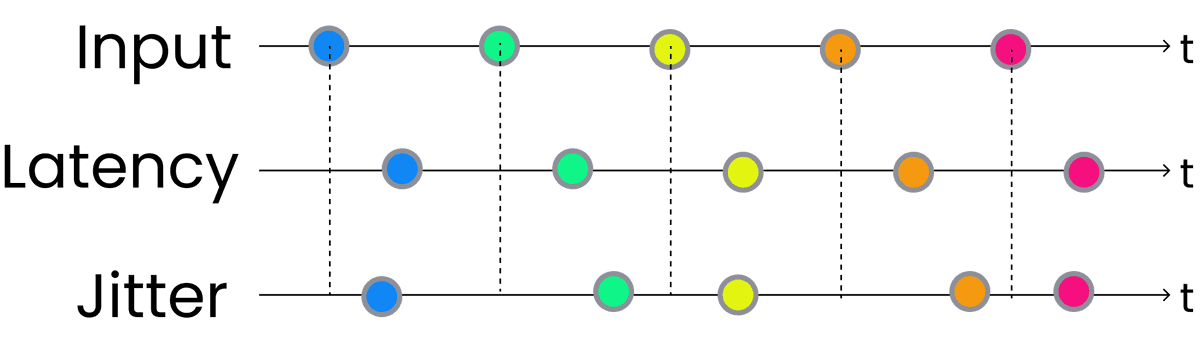

Every musician has their own sense of time, and we want to capture that as faithfully as possible. In Dragon Drummer the player triggers a realtime drum sampler, so audio timing issues from latency and jitter can easily ruin their groove.

Latency

Latency is the delay between when the player hits a key and when they hear the sound. Musicians are already used to small amounts of latency, for example from the time it takes sound to travel through the air (about 3ms per meter). Unfortunately, computer audio systems can add considerably more, especially if they weren’t designed for realtime audio.

What’s an acceptable amount of latency? When working with dedicated audio software, I aim for under 10ms. 20 to 30ms is noticeable but workable; much higher than that becomes a struggle. (For reference, a sixteenth note at 120bpm is 125ms long. Imagine having to play everything a sixteenth note early!)
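The sixteenth-note figure is simple arithmetic: at a tempo of bpm beats per minute, a quarter note lasts 60000 / bpm milliseconds, and a sixteenth is a quarter of that. A small sketch (the helper names are made up for illustration) that also expresses a given latency as a fraction of a sixteenth note:

```javascript
// Length of one beat (a quarter note) in milliseconds at the given tempo.
function quarterNoteMs(bpm) {
  return 60000 / bpm;
}

// A sixteenth note is a quarter of a beat.
function sixteenthNoteMs(bpm) {
  return quarterNoteMs(bpm) / 4;
}

// How big a latency is relative to a sixteenth note (1.0 = a whole sixteenth).
function latencyInSixteenths(latencyMs, bpm) {
  return latencyMs / sixteenthNoteMs(bpm);
}

console.log(sixteenthNoteMs(120));         // 125
console.log(latencyInSixteenths(10, 120)); // 0.08
console.log(latencyInSixteenths(30, 120)); // 0.24
```

So a 10ms delay is under a tenth of a sixteenth at 120bpm, while 30ms is already about a quarter of one.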

Jitter

So far we’ve been assuming a fixed delay between input and sound. Digital systems can also introduce jitter: random variations in that delay. Jitter is arguably worse, since it’s unpredictable and musicians can’t compensate for it.
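One way to make the distinction concrete: given a set of measured input-to-sound delays, the average is the latency a player can learn to compensate for, while the spread between the fastest and slowest hit is the jitter they can’t. A minimal sketch; summarizeDelays and the sample numbers are hypothetical, for illustration only:

```javascript
// Summarize measured input-to-sound delays (milliseconds).
// meanMs:   the steady latency a player can adapt to.
// jitterMs: peak-to-peak variation (slowest minus fastest), which they can't.
function summarizeDelays(delaysMs) {
  if (delaysMs.length === 0) throw new Error('need at least one delay');
  const sum = delaysMs.reduce((acc, d) => acc + d, 0);
  return {
    meanMs: sum / delaysMs.length,
    jitterMs: Math.max(...delaysMs) - Math.min(...delaysMs),
  };
}

// Made-up measurements: same average latency, very different jitter.
console.log(summarizeDelays([20, 20, 20, 20])); // { meanMs: 20, jitterMs: 0 }
console.log(summarizeDelays([12, 28, 15, 25])); // { meanMs: 20, jitterMs: 16 }
```

Both sets average 20ms, but only the first one is something a drummer could simply play ahead of.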

WebAudio

WebAudio is an audio processing API that is now supported by most browsers. It lets us schedule audio events with sample accuracy, which means we can sync audio as precisely as professional DAWs like Logic and Pro Tools. Let’s see how WebAudio handles our timing problems!
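Concretely, scheduling in WebAudio means passing a future time on the AudioContext clock (in seconds, the same clock as ctx.currentTime) to a source node's start(), rather than starting the sound "now" and hoping our code ran promptly. A minimal sketch: scheduleSample and timeToFrame are hypothetical helpers, and in a browser ctx would be a real AudioContext and buffer a decoded AudioBuffer. Because the function only uses createBufferSource(), connect() and start(), it can also be exercised with a stand-in object:

```javascript
// Schedule a decoded sample to start at `when` seconds on ctx's clock.
// start(when) hands the timing to the audio rendering thread, so the onset
// lands at that point on the audio clock regardless of exactly when this
// JavaScript runs (as long as it runs before `when`). Per the spec, a time
// earlier than ctx.currentTime simply plays immediately.
function scheduleSample(ctx, buffer, when) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);
  source.start(when);
  return source;
}

// The sample frame a given context time corresponds to.
function timeToFrame(ctx, when) {
  return Math.round(when * ctx.sampleRate);
}
```

In a browser this would be called as, for example, scheduleSample(ctx, kickBuffer, ctx.currentTime + 0.5), where kickBuffer is a hypothetical decoded sample; at a 44100Hz sample rate, timeToFrame shows that 1.5 seconds corresponds to frame 66150.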

Addressing Latency in WebAudio

Sadly, when it comes to latency we are at the mercy of browsers, hardware, and drivers. Some OSes have terrible latency out of the box and there’s nothing we can do about it. Even if an audio-savvy player installs a dedicated low-latency driver like ASIO, the WebAudio API doesn’t give us access to it. Fortunately, things seem to be getting a little better, so I’m crossing my fingers that low latency audio becomes a priority on all OSes. WebAudio does give us one knob for reducing latency: the latency hint (the latencyHint option passed when creating an AudioContext). It’s worth setting, but ultimately the browser decides whether it will (or can) respect it.
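The hint is set once, when the AudioContext is constructed: latencyHint accepts 'interactive', 'balanced', 'playback', or a number of seconds. Afterwards the context's baseLatency (and, where the browser supports it, outputLatency) shows what was actually chosen. A sketch with the constructor passed in so it can be tested outside a browser; createLowLatencyContext is a hypothetical name:

```javascript
// Create an AudioContext that asks for the lowest latency the browser allows.
// AudioContextCtor is window.AudioContext in a browser; it is injected here
// so the function can be tested without one.
function createLowLatencyContext(AudioContextCtor) {
  // 'interactive' asks for the lowest latency possible without glitching;
  // it is only a hint, and the browser may still pick something larger.
  const ctx = new AudioContextCtor({ latencyHint: 'interactive' });
  return {
    ctx,
    // Both values are in seconds. outputLatency isn't available in every
    // browser, so report null rather than a misleading number.
    baseLatency: ctx.baseLatency,
    outputLatency: typeof ctx.outputLatency === 'number' ? ctx.outputLatency : null,
  };
}
```

Logging baseLatency * 1000 after calling createLowLatencyContext(window.AudioContext) is a quick way to see, in milliseconds, how much of the hint a given browser honored.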

Addressing Jitter in WebAudio

Thankfully we can reduce jitter, but only at the cost of increased latency. Life is full of tradeoffs! First, let’s see where jitter can creep in:

Jitter from Event Loop

Here’s some code that plays a sound whenever the user presses a key.

```javascript
// playSound() stands in for whatever actually triggers the drum sample
document.addEventListener('keydown', (event) => {
  playSound();
});
```

It might appear that playSound() runs immediately when the browser detects the keypress, but the JavaScript event loop doesn’t guarantee that. In short, when the browser detects the keypress, it adds our callback to a queue to be processed eventually. If something else in the queue needs to run first, our callback has to wait.

Example: suppose our game runs at 60fps, so a render() function is called roughly every 16.7ms to draw to the screen. Behind the scenes, render() is pushed onto the queue each frame. If we happen to press a key while render() is executing, playSound() has to wait until it finishes. We can’t control when the user presses the key, so how long we wait is effectively random. Jitter is introduced!
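To get a feel for the numbers, here is a toy model of that example (a simulation, not a measurement): a render starts every 1000/60 ms and blocks the queue for a fixed time, and a key press that lands during a render waits until it finishes. The 8ms render duration is an arbitrary assumption, and queueDelayMs is a hypothetical name:

```javascript
const FRAME_MS = 1000 / 60; // ~16.7ms between frames at 60fps

// Toy model: how long a keydown callback waits if render() starts at every
// multiple of FRAME_MS and blocks the queue for renderMs.
function queueDelayMs(pressTimeMs, renderMs) {
  const intoFrame = pressTimeMs % FRAME_MS; // time since the last render began
  // Pressed mid-render: wait for the rest of it. Otherwise: run right away.
  return intoFrame < renderMs ? renderMs - intoFrame : 0;
}

// Assuming render() takes 8ms, presses at different points in the frame wait
// anywhere from 0 to 8ms depending only on luck: that spread is the jitter.
for (const t of [0, 2, 6, 10]) {
  console.log(`press at ${t}ms waits ${queueDelayMs(t, 8)}ms`); // 8, 6, 2, 0
}
```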

Jitter from Audio Buffer Size

Jitter is also introduced by the audio buffer size. To keep our speaker happy, we have to feed it a new amplitude value 44,100 times a second (or more, depending on the sample rate). Rather than requesting each sample individually, the audio driver asks for batches of samples at a time, say 256 of them. Larger buffers give us more breathing room to prepare each batch but add latency, since the entire buffer must be ready before it’s handed off to the driver. They can also add jitter: if we’re asked to play some audio immediately, it effectively gets rounded up to the start of the next buffer.
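The buffer tradeoff is easy to put numbers on. A buffer of N frames at sample rate R holds N / R seconds of audio, and in a simple block-based model a sound requested "right now" can’t start until the next buffer boundary. A sketch of both calculations; the function names are hypothetical, and real drivers may queue more than one buffer, which adds further latency:

```javascript
// Duration of one audio buffer in milliseconds.
function bufferDurationMs(bufferSize, sampleRate) {
  return (bufferSize / sampleRate) * 1000;
}

// Simple block-based model: a sound requested at requestTimeMs (measured from
// the start of the first buffer) only starts at the next buffer boundary.
// Returns how long the sound gets pushed back.
function boundaryDelayMs(requestTimeMs, bufferSize, sampleRate) {
  const blockMs = bufferDurationMs(bufferSize, sampleRate);
  const intoBlock = requestTimeMs % blockMs;
  return intoBlock === 0 ? 0 : blockMs - intoBlock;
}

console.log(bufferDurationMs(256, 44100).toFixed(2));  // 5.80
console.log(bufferDurationMs(1024, 44100).toFixed(2)); // 23.22
```

So at 44.1kHz a 256-sample buffer holds about 5.8ms of audio, and an "immediate" sound can be pushed back by anything from 0 to that much depending on where in the buffer the request lands.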

Fixing Jitter

Jitter arises when we ask the system to do something it can’t do immediately. If we want something to happen at a certain time, in life and in audio processing, we should give a heads-up! We can fix jitter by introducing a delay and letting WebAudio schedule the sound at a specific time in the future. We take advantage of the event’s timeStamp, a high-resolution record of when the key was actually pressed, and schedule the sound a fixed time after it. (In reality, WebAudio runs on a different clock from the one event.timeStamp uses, so we have to align those clocks too.)
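One simple way to align the clocks (not necessarily what Dragon Drummer does): in modern browsers event.timeStamp is on the same clock as performance.now(), so reading performance.now() alongside ctx.currentTime tells us how long ago, in audio-clock terms, the key went down. A sketch; eventTimeToAudioTime is a hypothetical helper, and nowMs is a parameter only so the function can be tested without a browser:

```javascript
// Map a DOM event timestamp (ms, same clock as performance.now()) onto the
// AudioContext clock (seconds) and add a fixed look-ahead.
// nowMs should come from performance.now(), read at (nearly) the same moment
// as ctx.currentTime.
function eventTimeToAudioTime(ctx, eventTimeStampMs, nowMs, lookAheadSec) {
  // How long ago the key actually went down, in seconds.
  const elapsedSec = (nowMs - eventTimeStampMs) / 1000;
  // The press expressed on the audio clock, then pushed into the future.
  return ctx.currentTime - elapsedSec + lookAheadSec;
}

// Browser usage (not run here):
// document.addEventListener('keydown', (event) => {
//   const when = eventTimeToAudioTime(ctx, event.timeStamp, performance.now(), 0.010);
//   source.start(when); // source: an AudioBufferSourceNode prepared elsewhere
// });
```

One caveat with this approach: ctx.currentTime advances in chunks of audio frames rather than smoothly, so the mapping carries a small error of its own. AudioContext.getOutputTimestamp(), which returns a paired contextTime and performanceTime, is another way to relate the two clocks.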

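```javascript
// playSoundAtTime() stands in for a function that schedules the drum sample;
// it takes a time on event.timeStamp's clock, in milliseconds
const LOOK_AHEAD_TIME = 10; // milliseconds, the same unit as event.timeStamp

document.addEventListener('keydown', (event) => {
  playSoundAtTime(event.timeStamp + LOOK_AHEAD_TIME);
});
```

As long as our LOOK_AHEAD_TIME is greater than the maximum jitter we expect, we’re good to go! Increasing LOOK_AHEAD_TIME also increases latency, though, so some tuning is required.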
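For the tuning step, one option is to derive the look-ahead from measured handler delays: take a high percentile rather than the absolute maximum (so a single outlier doesn’t inflate everyone’s latency) and add a small safety margin. A sketch; chooseLookAheadMs is a hypothetical name, and the 99th percentile and 2ms margin are arbitrary assumptions rather than values from the game:

```javascript
// Pick a look-ahead (ms) that covers most observed event-handler delays.
// delaysMs: measured delays, e.g. performance.now() - event.timeStamp.
function chooseLookAheadMs(delaysMs, percentile = 0.99, marginMs = 2) {
  if (delaysMs.length === 0) throw new Error('no measurements yet');
  const sorted = [...delaysMs].sort((a, b) => a - b);
  // Nearest-rank percentile: the smallest value covering that share of samples.
  const rank = Math.ceil(percentile * sorted.length);
  const index = Math.min(sorted.length - 1, Math.max(0, rank - 1));
  return sorted[index] + marginMs;
}
```

With percentile = 1 this reduces to the "greater than the maximum jitter" rule, plus the margin; lowering the percentile trades the occasional late hit for lower latency on every hit.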

Worth?

Addressing jitter costs extra latency (not to mention code complexity!). Currently I have jitter compensation turned off and no one has complained yet. I also haven’t run the numbers to see how much jitter we’re actually dealing with. Seems like a good candidate for A/B testing…
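Running the numbers could start with something as small as logging how long each keydown waited in the queue: since event.timeStamp and performance.now() share a clock in modern browsers, their difference at the top of the handler is the event-loop delay. A sketch that keeps a rolling window of samples; createDelayRecorder, the window size and the window.delayRecorder debug hook are all hypothetical, and this only captures the event-loop part of the jitter, not the audio-buffer part:

```javascript
// Collect recent keydown queueing delays (ms) in a fixed-size rolling window.
function createDelayRecorder(windowSize = 500) {
  const samples = [];
  return {
    // Call at the very top of the keydown handler.
    record(eventTimeStampMs, nowMs) {
      samples.push(nowMs - eventTimeStampMs);
      if (samples.length > windowSize) samples.shift(); // drop the oldest
    },
    stats() {
      if (samples.length === 0) return null;
      return {
        count: samples.length,
        minMs: Math.min(...samples),
        maxMs: Math.max(...samples),
      };
    },
  };
}

// Browser-only wiring; skipped when there is no DOM (e.g. under Node).
if (typeof document !== 'undefined') {
  const recorder = createDelayRecorder();
  document.addEventListener('keydown', (event) => {
    recorder.record(event.timeStamp, performance.now());
  });
  // Hypothetical debug hook: inspect delayRecorder.stats() from the console.
  window.delayRecorder = recorder;
}
```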

Audio is a struggle

WebAudio (and JS in general) is IMO fantastic for prototyping, so I’m gonna stick with it for now. Eventually I might have to port to native for more reliable audio, though 😩

October 20, 2020, by Larry Wang